1 Introduction

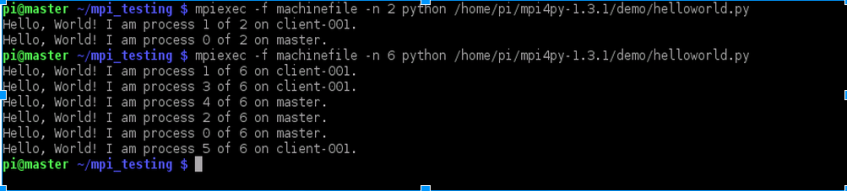

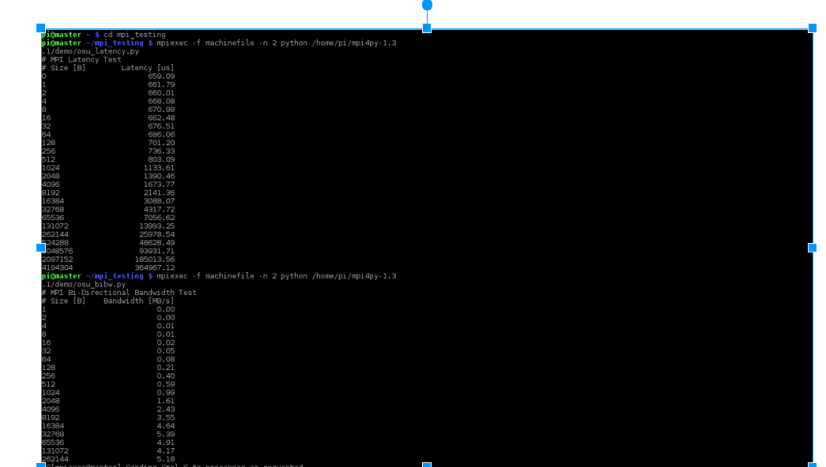

MPI stands for Message Passing Interface. An implementation of MPI, such as MPICH or OpenMPI, provides a platform for writing parallel programs on a distributed-memory system such as a Linux cluster. Generally, the platform allows programming in C using the MPI standard. To run parallel programs in this environment in Python, we need a module called mpi4py, which means “MPI for Python”. This module provides standard functions for tasks such as getting the rank of a process and sending and receiving messages or data between the nodes of the cluster. It allows a program to execute in parallel, with messages being passed between nodes. Both MPICH2 and mpi4py must be installed on your system. If you haven’t installed mpi4py, the following two guides cover installing, building and testing a sample program with mpi4py.

https://seethesource.wordpress.com/2015/01/05/raspberypi-hacks-part1/

https://seethesource.wordpress.com/2015/01/14/raspberypi-hacks-part2/

Once mpi4py is installed, you can start programming with it. This tutorial covers the important functions provided by mpi4py, such as sending and receiving messages, scattering and gathering data, and broadcasting messages, and shows how they can be used by providing examples. Using this information, it is possible to build scalable, efficient distributed parallel programs in Python. So, let’s begin.

2 Sending and receiving Messages

Communication in mpi4py is done using the send() and the recv() methods. As the names suggest, they are used to send messages to and receive messages from other nodes, respectively.

2.1 Introduction to send()

The general syntax of this function is: comm.send(data,dest)

Here, “data” can be any data/message which has to be sent to another node, and “dest” is the rank of the process to send it to.

Example: comm.send((rank+1)*5,dest=1).

This sends the value of (rank+1)*5 to the process with rank=1, so only that process can receive it.
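The snippets in this tutorial use the names comm and rank without showing where they come from. The following is a minimal sketch of the usual setup, assuming mpi4py and an MPI implementation are installed; the helper name describe() is made up for this illustration and is not part of mpi4py.

```python
def describe(comm):
    """Return a short label for the calling process within the communicator."""
    rank = comm.Get_rank()   # index of this process, from 0 to size-1
    size = comm.Get_size()   # total number of processes in the communicator
    return "process %d of %d" % (rank, size)


if __name__ == "__main__":
    try:
        from mpi4py import MPI   # needs mpi4py plus an MPI implementation
    except ImportError:
        print("mpi4py is not installed")
    else:
        comm = MPI.COMM_WORLD    # communicator containing every launched process
        print(describe(comm))
```

Launched with, for example, mpiexec -n 4 python describe.py (describe.py being a hypothetical file name), each of the four processes runs the same script and prints its own line.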

2.2 Introduction to recv()

The general syntax of this function is: comm.recv(source)

This tells a process to receive data/message only from the process whose rank is given in the “source” parameter. The received data is the return value of recv().

Example: comm.recv(source=1)

This receives the message only from a process with rank=1.

2.3 Example with simple send() and recv()

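    from mpi4py import MPI

    comm = MPI.COMM_WORLD      # communicator containing all processes
    rank = comm.Get_rank()     # rank of this process

    if rank == 0:
        data = (rank + 1) * 5
        comm.send(data, dest=1)
    if rank == 1:
        data = comm.recv(source=0)
        print(data)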

(For the full implementation refer to Example1.py)

2.4 Notes

- When a node is running the recv() method, it waits till it receives some data from the expected source. Once it receives some data, it continues with the rest of the program.

- Here, the “dest” parameter in send() and the “source” parameter in recv() need not be a constant value (or rank); each can be an expression.

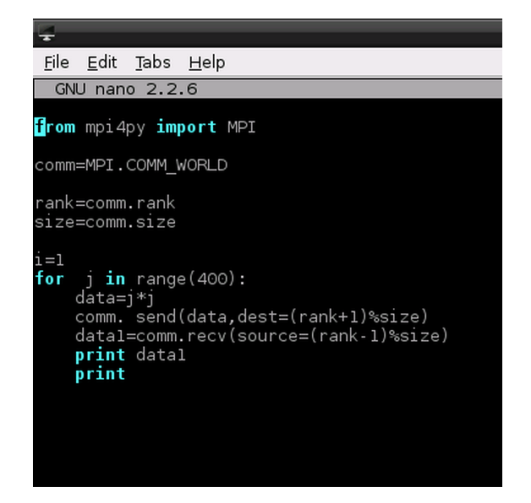

- The “size” member of the “comm” object is a good way to conditionalize the send() and recv() methods, and this leads us to dynamic sending and receiving of messages.
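The three notes above can be seen together in a small ring: each process computes its neighbours from rank and size, waits in recv() for the previous process, and then sends to the next one. This is only a sketch under the assumption that mpi4py is installed; the function names neighbours() and pass_token() and the starting value 100 are invented for the illustration.

```python
def neighbours(rank, size):
    """Return (dest, source): the next and the previous rank on a ring."""
    return (rank + 1) % size, (rank - 1) % size


def pass_token(comm):
    """Pass one value around the ring, starting and ending at rank 0."""
    rank, size = comm.Get_rank(), comm.Get_size()
    if size < 2:
        return None                        # nothing to pass with one process
    dest, source = neighbours(rank, size)
    if rank == 0:
        comm.send(100, dest=dest)          # start the token
        token = comm.recv(source=source)   # waits until the last rank sends
    else:
        token = comm.recv(source=source)   # waits until the previous rank sends
        comm.send(token + rank, dest=dest) # add own rank and pass it on
    return token


if __name__ == "__main__":
    try:
        from mpi4py import MPI   # needs mpi4py plus an MPI implementation
    except ImportError:
        print("mpi4py is not installed")
    else:
        world = MPI.COMM_WORLD
        print("rank %d received %r" % (world.Get_rank(), pass_token(world)))
```

Because every process other than rank 0 receives before it sends, each blocking recv() has a send() that will eventually match it, whatever the number of processes.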

2.5 Sending and receiving dynamically

Dynamic transfer of data is far more useful, as it allows data to be sent and received by multiple nodes at once. The decision to transfer can depend on the particular situation, which increases flexibility dramatically.

2.6 Example of dynamic sending and receiving of data

    comm.send(data_shared, dest=(rank*2)%size)
    comm.recv(source=(rank-3)%size)

The above two statements are dynamic because both the data to be sent and who it is sent to depend on the values of rank and size, which are determined at run time, so this eliminates the need for hard-coding the values. Each recv() call, however, receives only one message even if more than one is on its way to it: it services the first message it receives and continues to the next statement in the program.
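Before running expressions like these on a cluster, it can help to tabulate who sends to whom. The sketch below uses plain Python with no MPI at all; the helper names and the process count of 4 are assumptions chosen for illustration. It lists each rank's destination and expected source, and reports which receives would find a matching send if every rank ran both statements unconditionally.

```python
def send_dest(rank, size):
    """Destination used by comm.send(..., dest=(rank*2) % size)."""
    return (rank * 2) % size


def recv_source(rank, size):
    """Source used by comm.recv(source=(rank-3) % size)."""
    return (rank - 3) % size


def matched_receivers(size):
    """Ranks whose expected source really does send to them."""
    return [r for r in range(size)
            if send_dest(recv_source(r, size), size) == r]


if __name__ == "__main__":
    size = 4   # assumed process count, chosen only for illustration
    for rank in range(size):
        print("rank %d: sends to %d, waits for %d"
              % (rank, send_dest(rank, size), recv_source(rank, size)))
    print("receives with a matching send:", matched_receivers(size))
```

With four processes, only rank 2 waits for a rank that actually sends to it (rank 3). The other receives would keep waiting, which is why such statements are normally wrapped in conditions on rank.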

(For the full implementation refer to Example2.py)

3 Tagged send() and recv() functions

When we tag the send() and recv() calls, we can control the order in which messages are received, so we can be sure that one message will be delivered before another.

During dynamic transfer of data, situations arise where we need a particular send() to match a particular recv() to achieve a kind of synchronization. This can be done using the “tag” parameter in both send() and recv().

For example, a send() issued by the process with rank 1 can look like: comm.send(shared_data,dest=2,tag=1) and the matching recv(), called on the process with rank 2, would look like: comm.recv(source=1,tag=1)

So, this structure forces a match, leading to synchronization of data transfers. The advantage of tagging is that a recv() can be made to wait until it receives data from a corresponding send() with the expected tag. However, this has to be used with extreme care, as it can lead to a deadlock: a recv() that waits for a tag no send() ever uses will never return.

3.1 Example

    if rank == 0:
        shared_data1 = 23
        comm.send(shared_data1, dest=3, tag=1)
        shared_data2 = 34
        comm.send(shared_data2, dest=3, tag=2)
    if rank == 3:
        recv_data1 = comm.recv(source=0, tag=2)
        print(recv_data1)
        recv_data2 = comm.recv(source=0, tag=1)
        print(recv_data2)

The output of this would look like:

    34
    23

Thus, we can see that even though shared_data1 was sent first, the first recv() waited for the send() with tag=2, received that data and printed it, and only then did the program move on to the next recv().

(For the full implementation refer to Example3.py)
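The ordering in the example can be reasoned about with a toy model of the messages waiting at the receiving process. The Mailbox class below is purely illustrative: it is plain Python, not part of mpi4py, and not how mpi4py is implemented. It assumes both messages have already arrived, and shows that a recv() picks the earliest pending message whose source and tag both match, regardless of what else is waiting.

```python
class Mailbox:
    """Toy stand-in for the messages pending at one receiving rank."""

    def __init__(self):
        self._pending = []   # (source, tag, data) in order of arrival

    def deliver(self, source, tag, data):
        """Record a message that has arrived but not yet been received."""
        self._pending.append((source, tag, data))

    def recv(self, source, tag):
        """Return the earliest pending message matching source and tag."""
        for i, (src, t, data) in enumerate(self._pending):
            if src == source and t == tag:
                del self._pending[i]
                return data
        # A real blocking recv() would keep waiting here instead.
        raise LookupError("no message from %d with tag %d" % (source, tag))


if __name__ == "__main__":
    box = Mailbox()                 # mailbox of rank 3
    box.deliver(0, 1, 23)           # first send from rank 0, tag=1
    box.deliver(0, 2, 34)           # second send from rank 0, tag=2
    print(box.recv(0, 2))           # prints 34
    print(box.recv(0, 1))           # prints 23
```

In this model, the LookupError marks the situation that would be a deadlock in a real program: a receive asking for a tag that never arrives.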
